时隔差不多三个月,繁忙中又回顾了 Mesos,发现真是忘记了很多。因此写下这篇 blog,记录自己如何使用 Mesos 以及一些体验。

测试架构

- mesos-master * 3

- mesos-slave * 3

- zookeeper

- framework: marathon, chronos

配置集群

配置 Zookeeper

由之前翻译的文章《以 Apache Mesos 计算的开源数据中心》中可以了解到,Mesos 本身没有 “HA” 功能,是通过 Zookeeper 来实现的。

-

在各个 master 节点上安装 Zookeeper:

$ wget http://ftp.jaist.ac.jp/pub/apache/zookeeper/stable/zookeeper-3.4.6.tar.gz # 解压 $ tar -zxf zookeeper-3.4.6.tar.gz注:

笔者当时测试时使用的时 zookeeper-3.4.6 版本,昨天刚刚发了 3.4.7 版本,而且是 stable 版本。

-

切换到

conf/目录下,修改zoo.cfg文件,添加 Mesos 的 master 信息如下:$ cd conf/ $ vim zoo.cfg ... # master server server.1=192.168.5.51:2888:3888 server.2=192.168.5.52:2888:3888 server.3=192.168.5.53:2888:3888 # 如果需要的话,可以修改 dataDir 到自己指定的目录 -

在

/etc/下新建目录,并配置各个 master 节点的 zookeeper id:# mkdir -p /etc/zookeeper/conf # vim /etc/zookeeper/conf/myid (分别写上 1、2、3,对应于上面的配置文件即可)

配置 Mesos Master

-

在各个节点的

/etc/下新建目录如下:# mkdir /etc/mesos /etc/mesos-master/ -

为 master 配置 zookeeper:

# vim /etc/mesos/zk zk://192.168.5.51:2181,192.168.5.52:2181,192.168.5.53:2181/mesos # 在 /etc/mesos-master/ 目录下创建 hostname, ip 两个文件,分别写入本节点的 IP 地址,如(以 master1 节点为例): # vim /etc/mesos-master/hostname 192.168.5.51 # cp /etc/mesos-master/hostname /erc/mesos-master/ip # 在 /etc/mesos-master/ 目录下创建 quorum 文件,打开文件,在文件中写入 "2" # 完成该步骤后,目录结构如下: /etc/mesos `-- zk /etc/mesos-master |-- hostname |-- ip `-- quorum -

以上步骤分别在各个 master 节点上进行。

启动 Mesos 集群

启动 Zookeeper

直接执行启动脚本即可:

$ ./bin/zkServer.sh start-foreground # 在前台运行 zookeeper,如果是 "./bin/zkServer.sh start",则会在后台运行。

JMX enabled by default

Using config: /home/zookeeper-3.4.6/bin/../conf/zoo.cfg

2015-12-03 15:39:07,292 [myid:] - INFO [main:QuorumPeerConfig@103] - Reading configuration from: /home/zookeeper-3.4.6/bin/../conf/zoo.cfg

2015-12-03 15:39:07,296 [myid:] - INFO [main:QuorumPeerConfig@340] - Defaulting to majority quorums

2015-12-03 15:39:07,357 [myid:1] - INFO [main:DatadirCleanupManager@78] - autopurge.snapRetainCount set to 3

2015-12-03 15:39:07,357 [myid:1] - INFO [main:DatadirCleanupManager@79] - autopurge.purgeInterval set to 0

2015-12-03 15:39:07,358 [myid:1] - INFO [main:DatadirCleanupManager@101] - Purge task is not scheduled.

2015-12-03 15:39:07,397 [myid:1] - INFO [main:QuorumPeerMain@127] - Starting quorum peer

2015-12-03 15:39:07,418 [myid:1] - INFO [main:NIOServerCnxnFactory@94] - binding to port 0.0.0.0/0.0.0.0:2181

2015-12-03 15:39:07,455 [myid:1] - INFO [main:QuorumPeer@959] - tickTime set to 2000

2015-12-03 15:39:07,455 [myid:1] - INFO [main:QuorumPeer@979] - minSessionTimeout set to -1

2015-12-03 15:39:07,455 [myid:1] - INFO [main:QuorumPeer@990] - maxSessionTimeout set to -1

2015-12-03 15:39:07,455 [myid:1] - INFO [main:QuorumPeer@1005] - initLimit set to 10

2015-12-03 15:39:07,496 [myid:1] - INFO [main:FileSnap@83] - Reading snapshot /var/lib/zookeeper/version-2/snapshot.300000052

2015-12-03 15:39:07,537 [myid:1] - INFO [NIOServerCxn.Factory:0.0.0.0/0.0.0.0:2181:NIOServerCnxnFactory@197] - Accepted socket connection from /192.168.5.51:54456

2015-12-03 15:39:07,539 [myid:1] - INFO [Thread-1:QuorumCnxManager$Listener@504] - My election bind port: /192.168.5.51:3888

...

注:

三个节点上的 zookeeper 启动的间隔时间不宜过长,先启动的节点会等待剩下的节点启动,超时后 zookeeper 将启动失败。

启动 Mesos Master

可通过脚本或直接使用命令 mesos-master 启动:

$ mesos-master --work_dir=/var/lib/mesos/ --log_dir=/var/log/mesos/ --zk=zk://192.168.5.51:2181,192.168.5.52:2181,192.168.5.53:2181/mesos --ip=192.168.5.51 --quorum=2

I1204 12:01:28.122519 13557 logging.cpp:172] INFO level logging started!

I1204 12:01:28.123446 13557 main.cpp:181] Build: 2015-08-31 16:41:23 by root

I1204 12:01:28.123462 13557 main.cpp:183] Version: 0.23.0

I1204 12:01:28.123664 13557 main.cpp:204] Using 'HierarchicalDRF' allocator

I1204 12:01:28.310770 13557 leveldb.cpp:176] Opened db in 186.691525ms

I1204 12:01:28.394034 13557 leveldb.cpp:183] Compacted db in 83.199278ms

I1204 12:01:28.394163 13557 leveldb.cpp:198] Created db iterator in 72331ns

I1204 12:01:28.394268 13557 leveldb.cpp:204] Seeked to beginning of db in 82154ns

I1204 12:01:28.394575 13557 leveldb.cpp:273] Iterated through 3 keys in the db in 286938ns

I1204 12:01:28.394697 13557 replica.cpp:744] Replica recovered with log positions 282625 -> 282626 with 0 holes and 0 unlearned

2015-12-04 12:01:28,396:13557(0x7f617e1c4700):ZOO_INFO@log_env@712: Client environment:zookeeper.version=zookeeper C client 3.4.5

2015-12-04 12:01:28,396:13557(0x7f617e1c4700):ZOO_INFO@log_env@716: Client environment:host.name=master1

2015-12-04 12:01:28,396:13557(0x7f617e1c4700):ZOO_INFO@log_env@723: Client environment:os.name=Linux

2015-12-04 12:01:28,396:13557(0x7f617e1c4700):ZOO_INFO@log_env@724: Client environment:os.arch=2.6.32-504.30.3.el6.x86_64

2015-12-04 12:01:28,396:13557(0x7f617e1c4700):ZOO_INFO@log_env@725: Client environment:os.version=#1 SMP Wed Jul 15 10:13:09 UTC 2015

2015-12-04 12:01:28,397:13557(0x7f617e1c4700):ZOO_INFO@log_env@733: Client environment:user.name=root

2015-12-04 12:01:28,397:13557(0x7f617e1c4700):ZOO_INFO@log_env@741: Client environment:user.home=/root

2015-12-04 12:01:28,397:13557(0x7f617e1c4700):ZOO_INFO@log_env@753: Client environment:user.dir=/home/mesos-0.23.0/build/bin

2015-12-04 12:01:28,397:13557(0x7f617e1c4700):ZOO_INFO@zookeeper_init@786: Initiating client connection, host=192.168.5.51:2181,192.168.5.52:2181,192.168.5.53:2181 sessionTimeout=10000 watche

r=0x7f6181b67ba9 sessionId=0 sessionPasswd=<null> context=0x7f616c001170 flags=0

...

# 与上述启动 zookeeper 相同,所有 Master 启动的间隔时间不宜过长,等待所有的 Master 节点启动后,打印日志如下,组成了一个 Zookeeper 集群:

I1204 12:05:36.163465 13562 network.hpp:415] ZooKeeper group memberships changed

I1204 12:05:36.163709 13565 group.cpp:656] Trying to get '/mesos/log_replicas/0000000014' in ZooKeeper

I1204 12:05:36.164984 13565 group.cpp:656] Trying to get '/mesos/log_replicas/0000000015' in ZooKeeper

I1204 12:05:36.165647 13565 group.cpp:656] Trying to get '/mesos/log_replicas/0000000016' in ZooKeeper

I1204 12:05:36.166848 13560 network.hpp:463] ZooKeeper group PIDs: { log-replica(1)@192.168.5.51:5050, log-replica(1)@192.168.5.52:5050, log-replica(1)@192.168.5.53:5050 }

Master 集群启动成功。

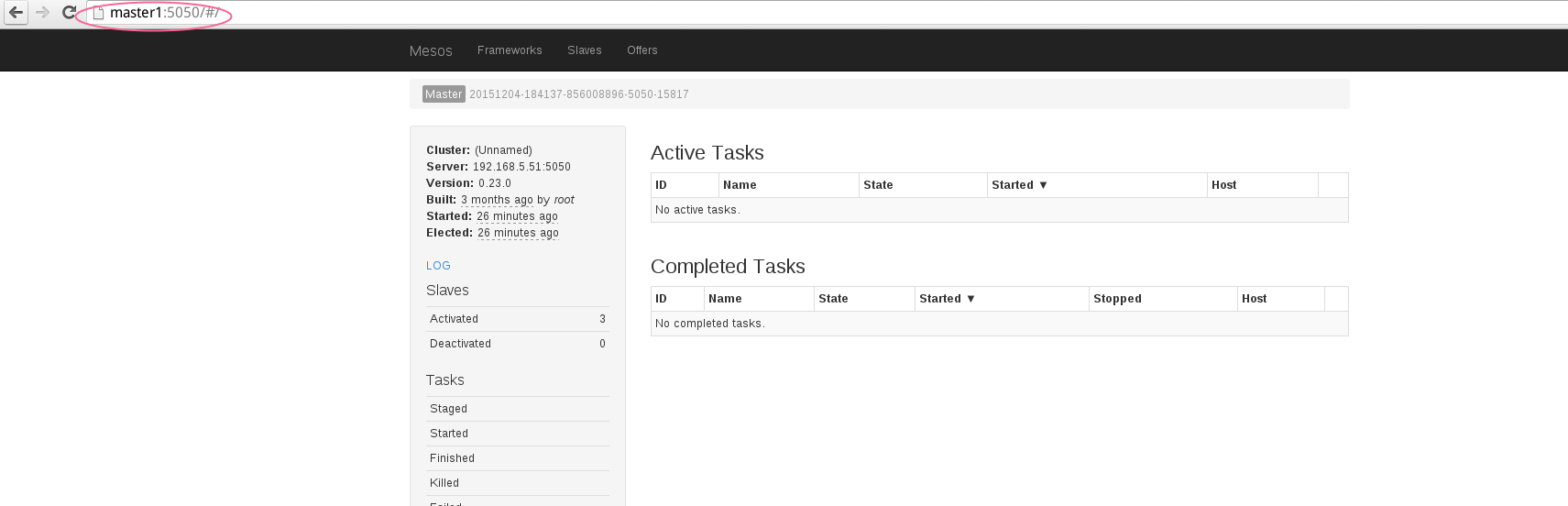

可以打开 web 页面查看。

说明:

- 设置

--quorum=2的意思是:至少要有 2 台 master 节点在线,否则集群无法起来,所以至少要两台 master 一起启动。

启动 Mesos Slave

与启动 Master 相同,可以使用脚本也可以直接使用 mesos-slave 命令启动:

$ mesos-slave --log_dir=/var/log/mesos --master=zk://192.168.5.51:2181,192.168.5.52:2181,192.168.5.53:2181/mesos --hostname=slave1

I1204 14:07:06.030362 6460 logging.cpp:172] INFO level logging started!

I1204 14:07:06.079773 6460 main.cpp:162] Build: 2015-08-31 16:41:23 by root

I1204 14:07:06.079802 6460 main.cpp:164] Version: 0.23.0

I1204 14:07:06.116693 6460 containerizer.cpp:111] Using isolation: posix/cpu,posix/mem

2015-12-04 14:07:06,151:6460(0x7f6b74772700):ZOO_INFO@log_env@712: Client environment:zookeeper.version=zookeeper C client 3.4.5

2015-12-04 14:07:06,151:6460(0x7f6b74772700):ZOO_INFO@log_env@716: Client environment:host.name=slave1

2015-12-04 14:07:06,151:6460(0x7f6b74772700):ZOO_INFO@log_env@723: Client environment:os.name=Linux

2015-12-04 14:07:06,151:6460(0x7f6b74772700):ZOO_INFO@log_env@724: Client environment:os.arch=2.6.32-504.30.3.el6.x86_64

2015-12-04 14:07:06,151:6460(0x7f6b74772700):ZOO_INFO@log_env@725: Client environment:os.version=#1 SMP Wed Jul 15 10:13:09 UTC 2015

2015-12-04 14:07:06,158:6460(0x7f6b74772700):ZOO_INFO@log_env@733: Client environment:user.name=root

2015-12-04 14:07:06,158:6460(0x7f6b74772700):ZOO_INFO@log_env@741: Client environment:user.home=/root

2015-12-04 14:07:06,158:6460(0x7f6b74772700):ZOO_INFO@log_env@753: Client environment:user.dir=/home/mesos-0.23.0/build/bin

2015-12-04 14:07:06,158:6460(0x7f6b74772700):ZOO_INFO@zookeeper_init@786: Initiating client connection, host=192.168.5.51:2181,192.168.5.52:2181,192.168.5.53:2181 sessionTimeout=10000 watcher

=0x7f6b78b16ba9 sessionId=0 sessionPasswd=<null> context=0x7f6b48000a70 flags=0

I1204 14:07:06.163914 6460 main.cpp:249] Starting Mesos slave

I1204 14:07:06.170938 6468 slave.cpp:190] Slave started on 1)@192.168.5.54:5051

...

依次启动 slave,可以在 web 上看到各个 slave 节点的信息。

配置和运行 Marathon 框架

-

在

/etc/下新建目录,并创建配置文件,如下:# mkdir -p /etc/marathon/conf # touch /etc/marathon/conf/hostname /etc/marathon/conf/master /etc/marathon/zk -

编辑文件进行配置:

# vim /etc/marathon/conf/hostname 192.168.5.51 # vim /etc/marathon/conf/master zk://192.168.5.51:2181,192.168.5.52:2181,192.168.5.53:2181/mesos # vim /etc/marathon/zk zk://192.168.5.51:2181,192.168.5.52:2181,192.168.5.53:2181/marathon说明:

笔者虚拟机有限,所以框架运行在 Master 上了。

-

运行 Marathon 框架:

./bin/start --master zk://192.168.5.51:2181,192.168.5.52:2181,192.168.5.53:2181/mesos --zk zk://192.168.5.51:2181,192.168.5.52:2181,192.168.5.53:2181/marathon --framework_name marathon1 --hostname marathon1 MESOS_NATIVE_JAVA_LIBRARY is not set. Searching in /usr/lib /usr/local/lib. MESOS_NATIVE_LIBRARY, MESOS_NATIVE_JAVA_LIBRARY set to '/usr/local/lib/libmesos.so' [2015-12-04 14:18:33,166] INFO Starting Marathon 0.10.0 (mesosphere.marathon.Main$:87) ... [2015-12-04 14:19:36,511] INFO Launched 0 tasks on 0 offers, declining 1 (mesosphere.marathon.tasks.IterativeOfferMatcher$:241) [2015-12-04 14:19:36,512] INFO Offer value: "20151204-120535-872786112-5050-32763-O124" rescinded (mesosphere.marathon.MarathonScheduler$$EnhancerByGuice$$851feae6:93) [2015-12-04 14:19:41,423] INFO started processing 1 offers, launching at most 1 tasks per offer and 1000 tasks in total (mesosphere.marathon.tasks.IterativeOfferMatcher$:132) [2015-12-04 14:19:41,425] INFO Offer [20151204-120535-872786112-5050-32763-O126]. Decline with default filter refuseSeconds (use --decline_offer_duration to configure) (mesosphere.marathon.tasks.IterativeOfferMatcher$:231) -

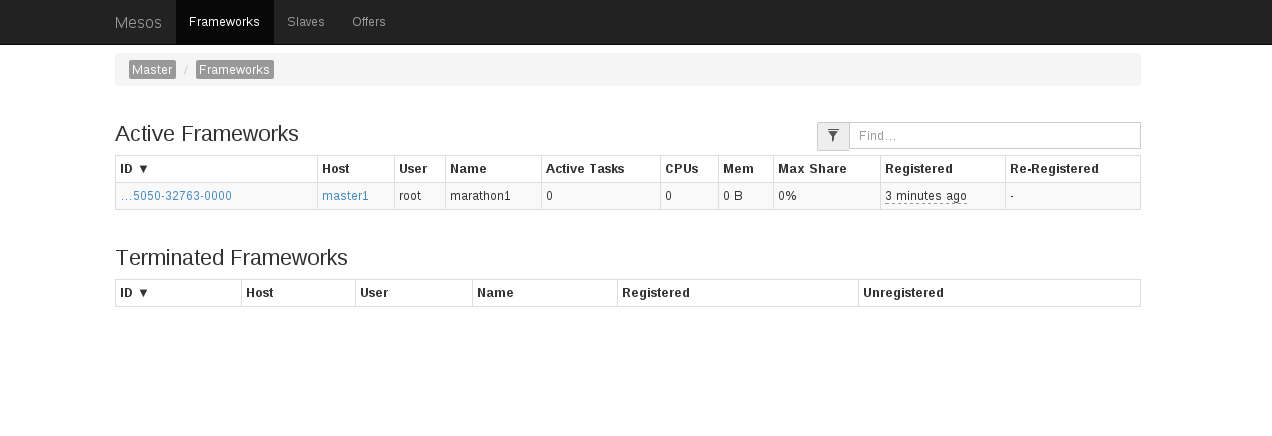

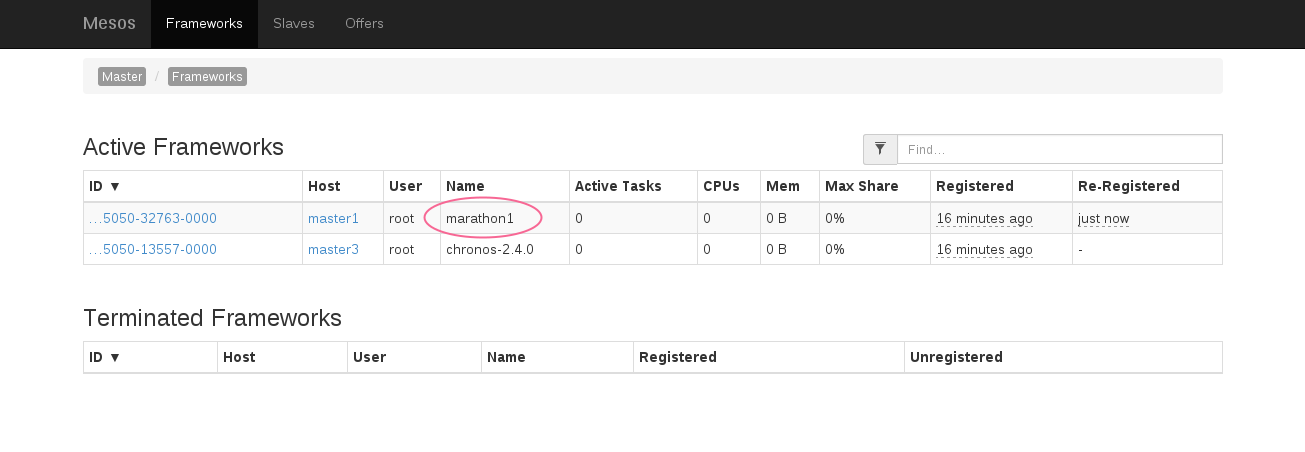

在 web 上可以看到添加的 marathon 框架:

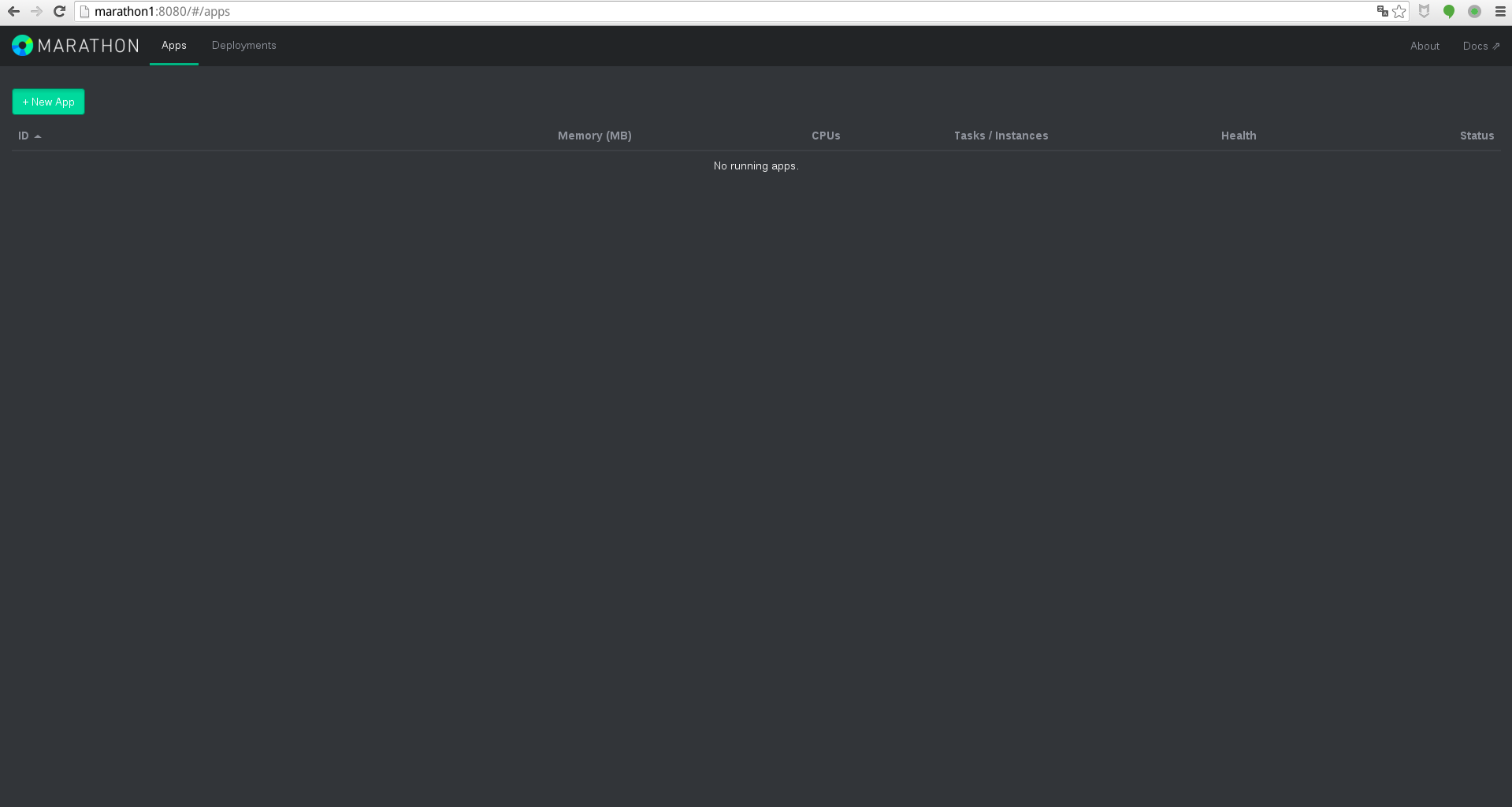

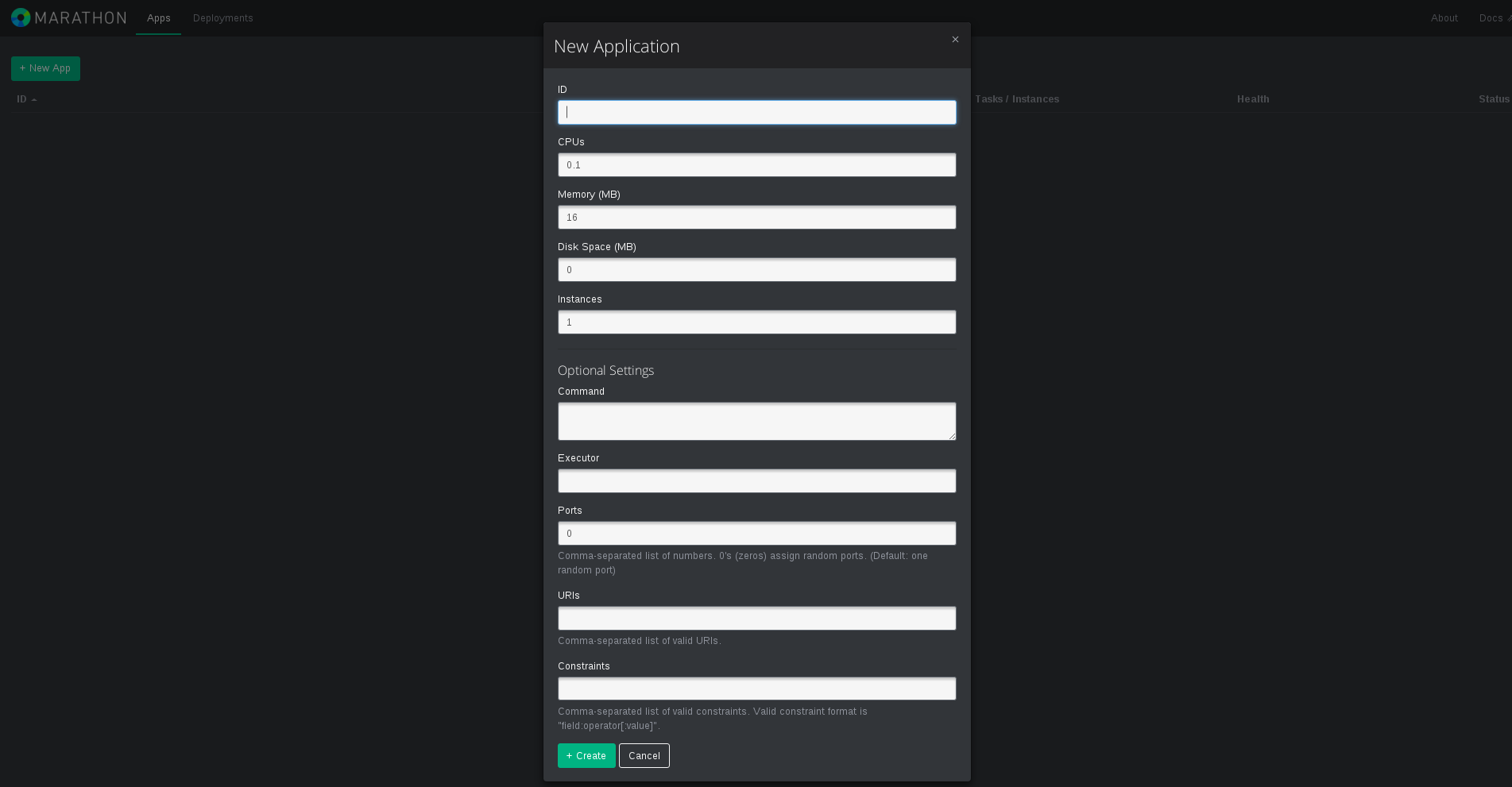

Marathon 的界面是这样子的:

配置和运行 Chronos 框架

- 安装 Chronos:

yum install chronos; - 安装好后,chronos 的配置文件在

/etc/chronos/conf/目录下,只有一个http_port文件,默认端口是 4400; -

启动 chronos:

# initctl start chronos # ps -ef | grep chronos root 2312 1 83 18:57 ? 00:00:04 java -Djava.library.path=/usr/local/lib:/usr/lib64:/usr/lib -Djava.util.logging.SimpleFormatter.format=%2$s %5$s%6$s%n -Xmx512m -cp /usr/bin/chronos org.apache.mesos.chronos.scheduler.Main --zk_hosts 192.168.5.51:2181,192.168.5.52:2181,192.168.5.53:2181 --master zk://192.168.5.51:2181,192.168.5.52:2181,192.168.5.53:2181/mesos --http_port 4400 -

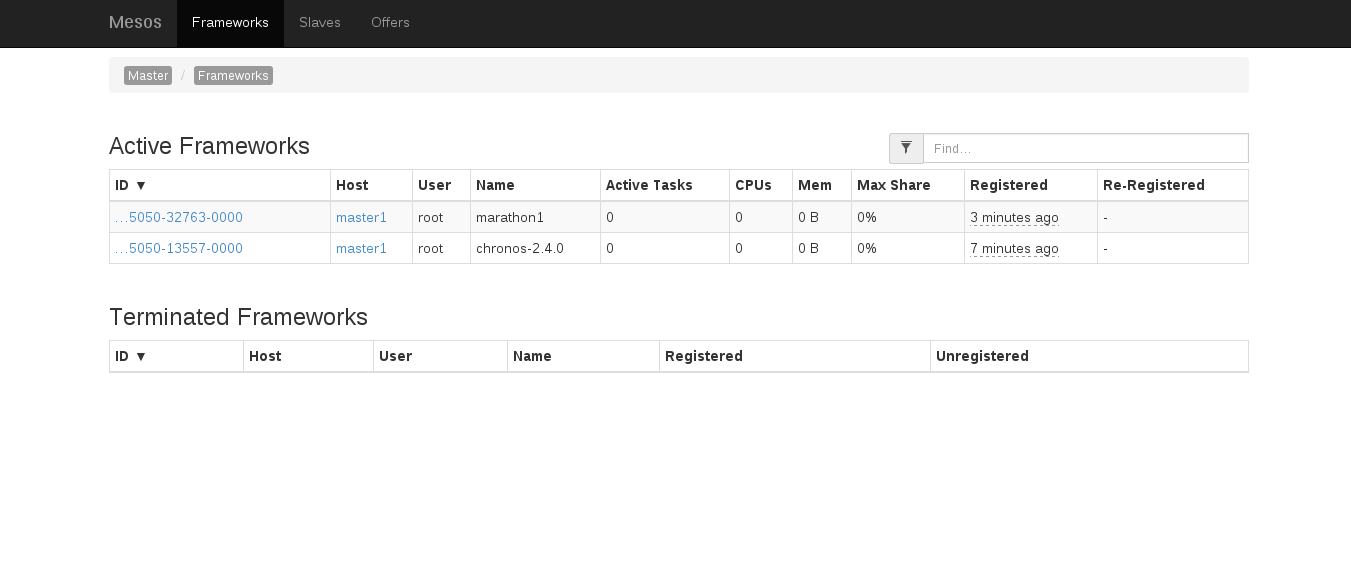

在 web 上可以看到新增的 chronos 框架:

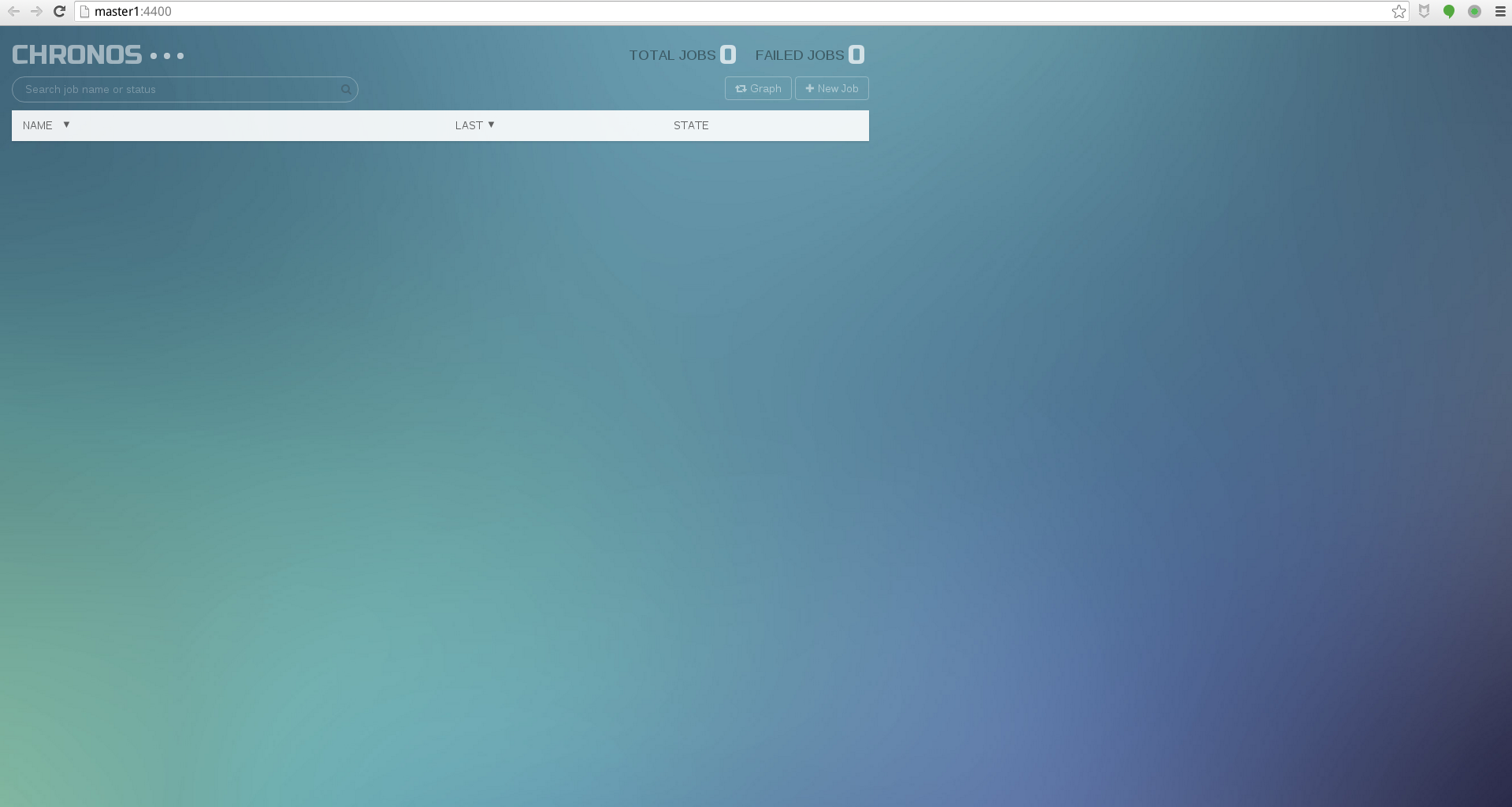

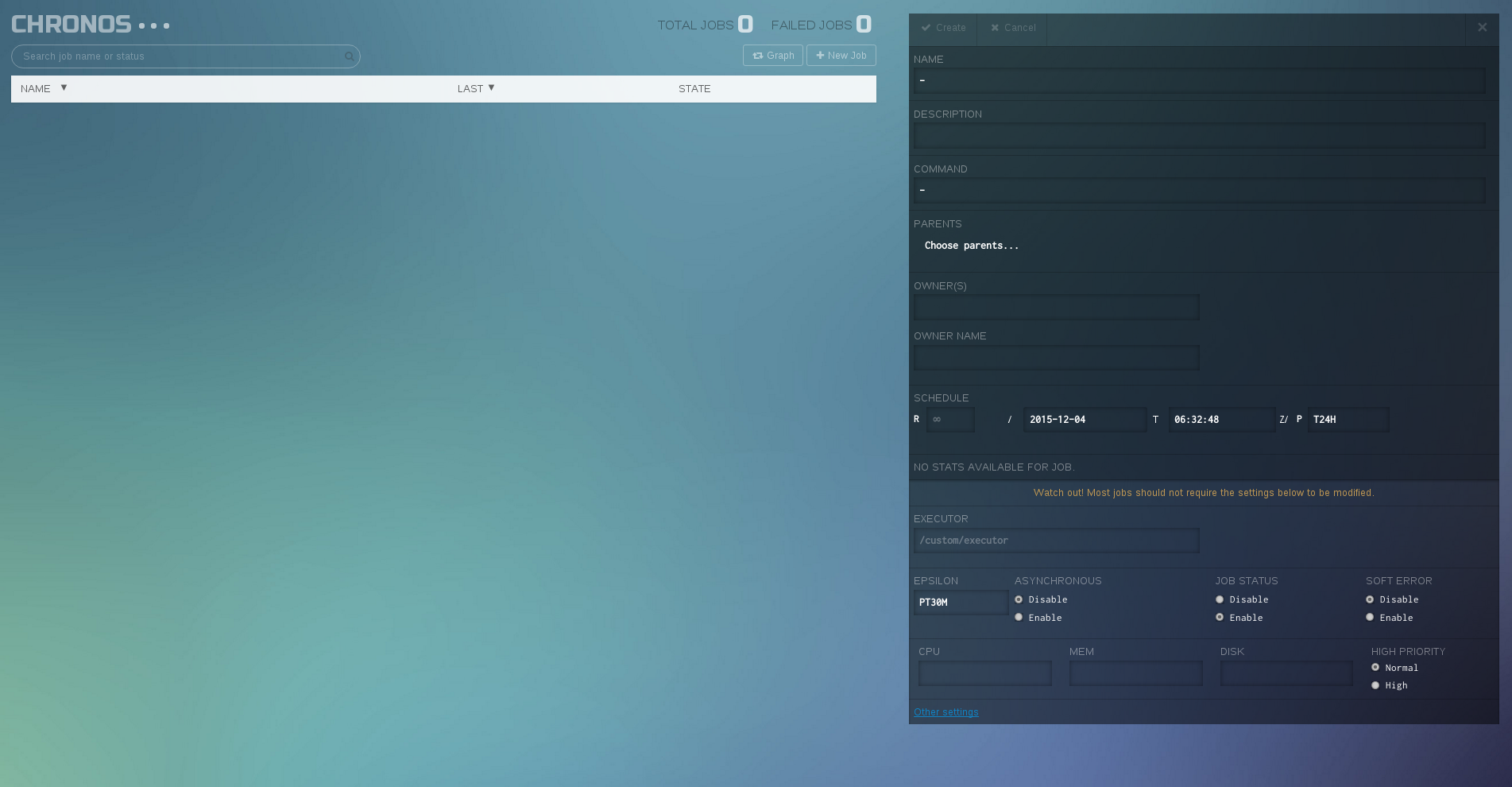

Chronos 界面是这样的:

测试 Mesos 的高可用

不知道在这里用”高可用“一词来描述该特性是否合适。

测试 master 的高可用

-

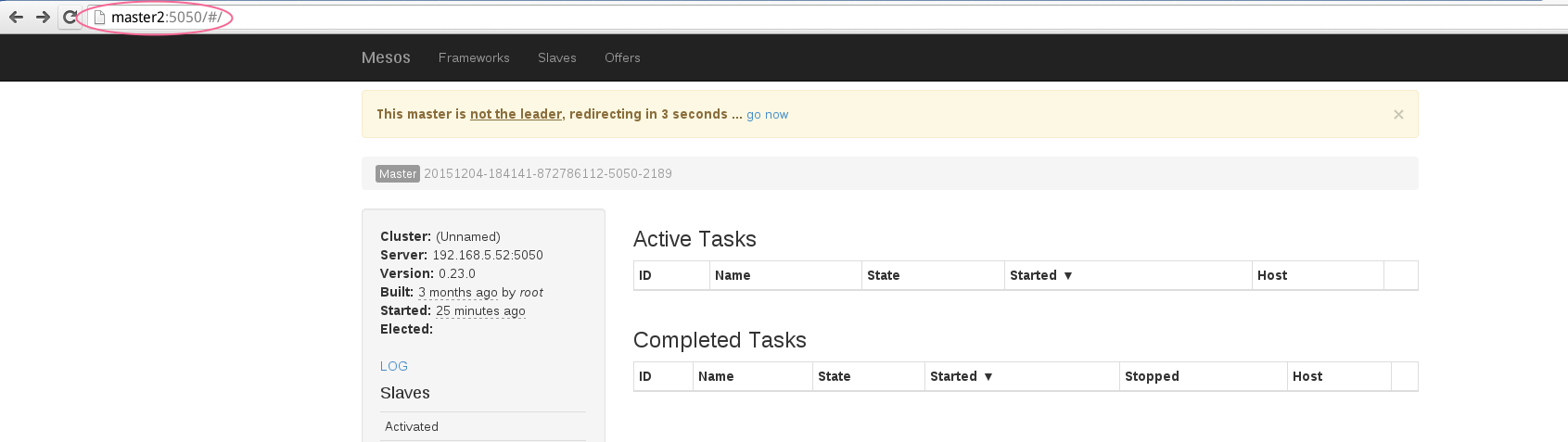

选择非 leader 的 Master,在 web 上打开页面,将看到如下提示,随后会自动跳转到 leader 节点上: 打开 master2:5050 后自动跳转到 master2:5050

-

同理,如果一台 master 不幸身亡了,集群还是会继续工作的。

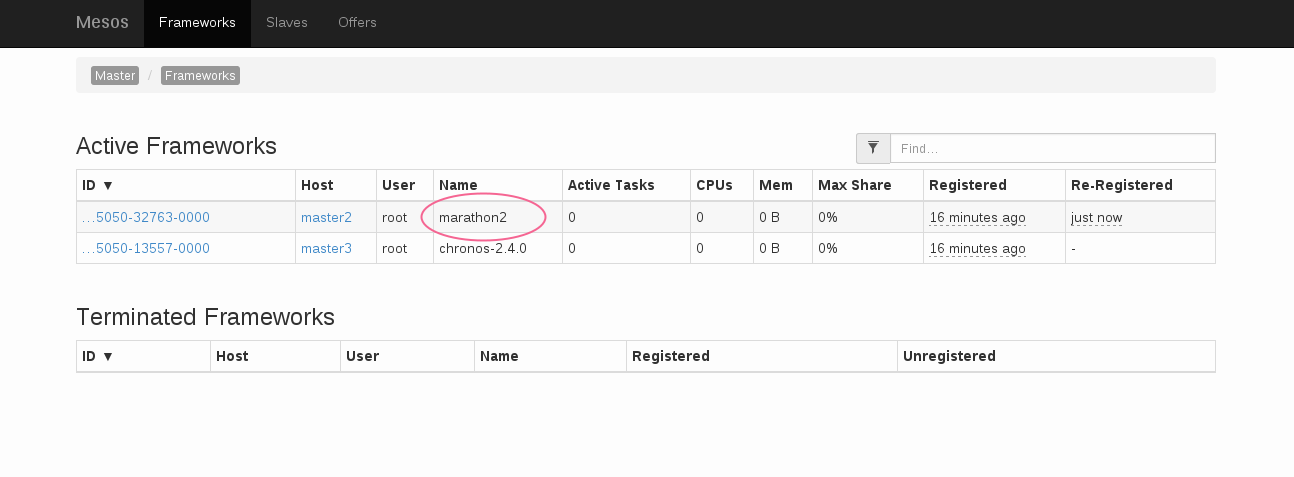

测试 framework 的高可用

- 停止其中一个框架,如 “marathon1”;

-

在 web 上刷新 Frameworks 标签的信息,看到 framework 的信息有所变化,运行的框架为 “marathon2”。

今天的记录就到这里,还有很多问题需要深入研究。

到现在为止,也只是运行了几个框架,还没有实际运行任务。敬请期待下个内容 :-)